The iconic computerized voice of Stephen Hawking in the later years of his life came from an advanced communication system he used to express himself as his Amyotrophic Lateral Sclerosis (ALS) progressed.

Through slight movements of his cheek, Hawking selected the words he wanted to say on a screen, and those words were then converted into speech.

Now, research scientists at Tec de Monterrey’s Guadalajara campus are working on new solutions for people who, like Hawking, are unable to talk. They are testing a neurological decoder that reads brain signals and instantly translates them into speech.

“What we want is for patients to be able to communicate verbally by using a brain-computer interface, without invasive surgeries or chips implanted in the brain,” explains Mauricio Antelis, leader of the Tec’s Laboratory of Neurotechnology and Brain-Computer Interfaces.

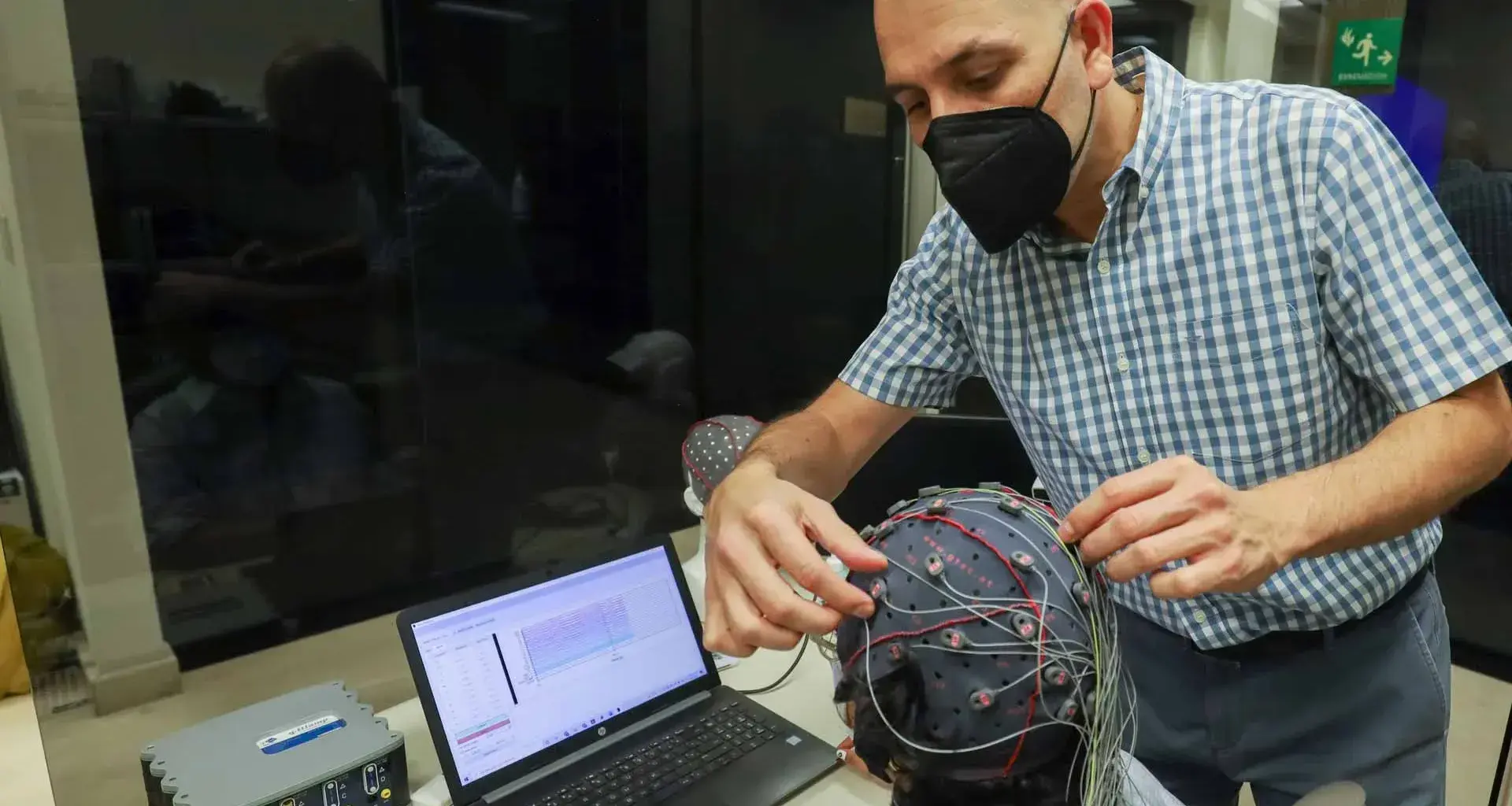

The researchers have been working with healthy volunteers in the laboratory, detecting signals from their brains and muscles and recording what they say in everyday situations such as asking for food or water.

Volunteers wear a kind of cap fitted with small metal discs containing electrodes, which are placed on the scalp and on certain facial muscles.

“The volunteers pronounce or think of words such as ‘yes, no, water, food, or sleep,’” adds Denisse Alonso, who is a researcher on the team and a Ph.D. student at the Tec.

This information is decoded by machine learning algorithms designed at the Tec, which are being programmed to reproduce the words as audio.
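To give a rough sense of what "decoding" can mean here, the following is a minimal sketch in Python, not the Tec team's method. It assumes each attempt at a word has already been reduced to a short list of numbers (a feature vector), uses synthetic data in place of real recordings, and swaps in a simple nearest-centroid classifier. The function names, feature count, and noise level are all hypothetical; only the five-word vocabulary comes from the article.

```python
import math
import random

# Vocabulary quoted in the article; everything else below is illustrative.
WORDS = ["yes", "no", "water", "food", "sleep"]


def make_synthetic_trials(n_per_word=20, n_features=8, seed=0):
    """Create fake (features, word) pairs standing in for real recordings."""
    rng = random.Random(seed)
    trials = []
    for idx, word in enumerate(WORDS):
        # Hypothetical "typical" signal pattern for this word.
        prototype = [math.sin((idx + 1) * (k + 1)) for k in range(n_features)]
        for _ in range(n_per_word):
            # Each trial is the prototype plus a little random noise.
            noisy = [p + rng.gauss(0, 0.1) for p in prototype]
            trials.append((noisy, word))
    return trials


def fit_centroids(trials):
    """Average the feature vectors of each word to get one centroid per word."""
    sums, counts = {}, {}
    for features, word in trials:
        acc = sums.setdefault(word, [0.0] * len(features))
        for k, value in enumerate(features):
            acc[k] += value
        counts[word] = counts.get(word, 0) + 1
    return {word: [v / counts[word] for v in acc] for word, acc in sums.items()}


def decode(features, centroids):
    """Return the word whose centroid is closest to the given features."""
    return min(centroids, key=lambda word: math.dist(features, centroids[word]))


if __name__ == "__main__":
    centroids = fit_centroids(make_synthetic_trials(seed=0))
    unseen = make_synthetic_trials(n_per_word=5, seed=1)
    hits = sum(decode(features, centroids) == word for features, word in unseen)
    print(f"decoded {hits} of {len(unseen)} synthetic trials correctly")
```

Real brain and muscle signals are far noisier than this toy data, and turning a decoded word into audio would be a separate step that the sketch does not cover.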

Mentally controlling “robot hands” or wheelchairs

Their laboratory research also covers ways to tell a wheelchair to make an “emergency stop,” as well as practical devices that are being tested in hospitals.

For instance, patients at TecSalud’s ALS Multidisciplinary Clinic at the Zambrano Hellion Hospital in Monterrey are attempting to regain their mobility through a robot hand that utilizes a brain-computer interface.

“Patients make a visual selection from six options on a screen, which correspond to the five fingers and the whole hand. When we detect a brain signal, the robot performs the corresponding movement,” explains Antelis.

In the end, this ability to listen to brain activity, understand it, and act upon it has the potential to improve people’s lives. That, concludes Professor Antelis, is what it’s all about.
